📌 Introduction

PySpark, a framework for big data processing, has revolutionised the way we handle massive datasets. At its core lies the Resilient Distributed Dataset (RDD), the fundamental building block for distributed computing. Let’s break it down and uncover its brilliance! 🌟

📌 What is an RDD?

An RDD is PySpark’s distributed collection of data spread across multiple nodes in a cluster, allowing parallel computation. Think of it as a magical data structure that enables distributed processing while being:

1️⃣ Immutable: Once created, it cannot be modified.

2️⃣ Fault-Tolerant: Automatically recovers lost data through lineage.

🌟 Key Features of RDDs

1️⃣ Partitioning for Parallelism

RDDs are divided into smaller partitions 🧩, distributed across nodes, ensuring efficient parallel execution.

2️⃣ Fault Tolerance

If a node crashes 🚨, RDDs can rebuild data using lineage graphs 🛠️, making them robust for large-scale applications.

3️⃣ Lazy Evaluation

Operations on RDDs are not executed immediately. Instead, transformations are lazy, optimizing the computation pipeline for better performance ⚙️.

4️⃣ In-Memory Computation

RDDs often store data in memory 🧠 for faster access, reducing disk I/O and speeding up processing.

🛠️ Operations on RDDs

RDDs support two types of operations:

🔄 Transformations (E.g., map(), filter())

These are lazy operations that define a computation pipeline without executing it immediately.

- Example:

rdd.map(lambda x: x * 2)doubles each element in the RDD.

✅ Actions (E.g., collect(), count())

These trigger execution and return results.

- Example:

rdd.collect()retrieves all elements from the RDD.

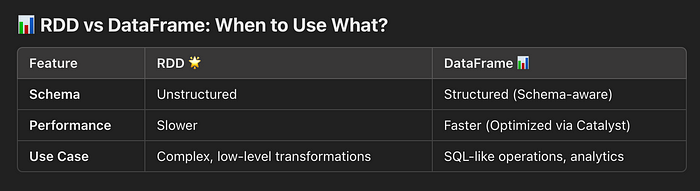

✅Difference b/w RDD and Data Frame

🌈 Why RDDs Still Matter?

While DataFrames and Datasets are now preferred for most tasks due to better performance, RDDs remain indispensable for:

🔸 Low-level transformations.

🔸 Complex custom data processing.

🔸 Working with unstructured data.

🚀 Conclusion

RDDs form the bedrock of PySpark’s distributed computing model. While newer abstractions like DataFrames bring ease and efficiency, RDDs empower developers with fine-grained control over data and computations. Whether you’re building machine learning pipelines 🤖 or crunching big data 📊, understanding RDDs is your first step towards PySpark mastery.